For decades, proving that a generic drug works just like its brand-name counterpart meant running expensive, time-consuming clinical trials with human volunteers. Blood samples. Long waits. High costs. But that’s changing fast. Since 2023, bioequivalence testing has undergone a quiet revolution. New tools powered by artificial intelligence, advanced imaging, and automated labs are replacing old-school methods. The goal? Faster approvals, lower costs, and just as much safety. And it’s not science fiction: it’s happening right now in FDA labs and contract research organizations (CROs) across the U.S.

What Bioequivalence Testing Actually Means

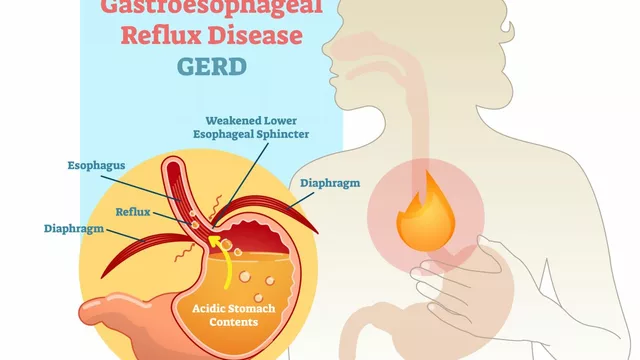

Bioequivalence testing answers one simple question: Does the generic version of a drug get into your bloodstream at the same rate and in the same amount as the brand-name version? If yes, it’s considered bioequivalent. That means it’ll work the same way in your body, with the same effect and the same side effects. For simple pills, this was easy to prove with just a few blood draws after volunteers took the drug. But for complex products, such as inhalers, skin patches, or injectables that release medicine slowly over weeks, traditional methods started to fall apart. That’s where the new technologies come in.
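To make "same rate and amount" concrete: amount is usually summarized by the area under the concentration-time curve (AUC), and rate by the peak concentration (Cmax). The sketch below computes both for two made-up blood-level profiles and compares the test/reference ratios against the conventional 0.80 to 1.25 window. The sample values and function names are illustrative assumptions. A real study applies that window to a 90% confidence interval across many volunteers, not to a single pair of curves.

```python
from typing import Sequence


def auc_trapezoid(times: Sequence[float], concs: Sequence[float]) -> float:
    """Area under the concentration-time curve by the linear trapezoidal rule."""
    if len(times) != len(concs) or len(times) < 2:
        raise ValueError("need at least two matching time/concentration points")
    total = 0.0
    for i in range(1, len(times)):
        width = times[i] - times[i - 1]
        total += width * (concs[i] + concs[i - 1]) / 2.0
    return total


def within_limits(ratio: float, lower: float = 0.80, upper: float = 1.25) -> bool:
    """True if a test/reference ratio falls inside the conventional window."""
    return lower <= ratio <= upper


# Hypothetical data: hours after dosing and plasma concentrations (mg/L).
times = [0.0, 0.5, 1.0, 2.0, 4.0, 8.0, 12.0]
reference = [0.0, 4.0, 6.0, 5.0, 3.0, 1.0, 0.5]  # brand-name product
test = [0.0, 3.5, 5.8, 5.1, 2.9, 1.1, 0.5]       # generic product

auc_ref = auc_trapezoid(times, reference)
auc_test = auc_trapezoid(times, test)
auc_ratio = auc_test / auc_ref           # extent of absorption ("amount")
cmax_ratio = max(test) / max(reference)  # peak exposure ("rate")

print(f"AUC ratio:  {auc_ratio:.3f}  within limits: {within_limits(auc_ratio)}")
print(f"Cmax ratio: {cmax_ratio:.3f}  within limits: {within_limits(cmax_ratio)}")
```

Both ratios sit close to 1.0 for this invented data set, so this pair of curves would pass the point check. The statistical analysis of a full study is considerably more involved.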

AI Is Cutting Study Times in Half

The biggest shift? Artificial intelligence. In early 2024, the FDA launched BEAM (Bioequivalence Assessment Mate), a machine learning tool designed to automate the review of pharmacokinetic data. Before BEAM, reviewers spent up to 80 hours per application sorting through spreadsheets, graphs, and raw data. Now, BEAM does the heavy lifting: identifying patterns, flagging outliers, even predicting bioequivalence outcomes with 92% accuracy. Internal FDA data shows it cuts reviewer workload by 52 hours per application. That’s not just efficiency; it’s a game-changer for speeding up generic drug approvals.
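A headline accuracy figure is easier to judge alongside the number of cases behind it. As a rough sketch (not BEAM’s actual evaluation, which is not described here), the Wilson score interval below shows how wide the uncertainty around an observed 92% would be for a few hypothetical sample sizes. The sample sizes and the function name `wilson_interval` are assumptions made purely for illustration.

```python
import math


def wilson_interval(successes: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95%)."""
    if total <= 0 or not 0 <= successes <= total:
        raise ValueError("need 0 <= successes <= total and total > 0")
    p = successes / total
    denom = 1.0 + z * z / total
    center = (p + z * z / (2.0 * total)) / denom
    half_width = z * math.sqrt(p * (1.0 - p) / total + z * z / (4.0 * total * total)) / denom
    return center - half_width, center + half_width


# Hypothetical evaluation sizes; the real number of cases is not reported.
for n in (50, 200, 1000):
    correct = round(0.92 * n)
    low, high = wilson_interval(correct, n)
    print(f"n={n:4d}: observed {correct / n:.2%}, interval {low:.1%} to {high:.1%}")
```

The interval narrows as the number of evaluated cases grows, which is why the same 92% can mean quite different things depending on the data behind it.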

AI isn’t just helping reviewers. It’s also improving how studies are designed. Machine learning models now predict how a drug will behave in the body based on in vitro data alone. This is called virtual bioequivalence. For complex products like PLGA implants, tiny pellets that release medicine over months, traditional clinical trials were nearly impossible to run ethically or affordably. Now, a virtual BE platform, funded by the FDA in August 2024, simulates drug release and absorption using physics-based models. Early results show it can replace clinical endpoint studies for up to 65% of these complex cases.
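To give a feel for what "simulating absorption" means, here is a deliberately tiny sketch using the textbook one-compartment model with first-order absorption and elimination. It is far simpler than the physics-based models mentioned above and is not the FDA-funded platform. Every parameter value and function name is a hypothetical chosen for illustration, with the absorption rate constant standing in for something estimated from in vitro release data.

```python
import math


def concentration(t: float, dose: float, ka: float, ke: float, volume: float) -> float:
    """Plasma concentration at time t (one compartment, first-order absorption).

    ka: absorption rate constant (1/h), ke: elimination rate constant (1/h),
    volume: volume of distribution (L). Assumes complete bioavailability.
    """
    if math.isclose(ka, ke):
        raise ValueError("this closed form requires ka != ke")
    scale = dose * ka / (volume * (ka - ke))
    return scale * (math.exp(-ke * t) - math.exp(-ka * t))


def time_of_peak(ka: float, ke: float) -> float:
    """Time at which the concentration curve reaches its maximum."""
    return math.log(ka / ke) / (ka - ke)


def peak_concentration(dose: float, ka: float, ke: float, volume: float) -> float:
    """Maximum concentration (Cmax) predicted by the model."""
    return concentration(time_of_peak(ka, ke), dose, ka, ke, volume)


# Hypothetical inputs: same dose and elimination, slightly slower release
# for the generic (as might be inferred from an in vitro release test).
DOSE_MG, VOLUME_L, KE = 100.0, 50.0, 0.1
KA_REFERENCE, KA_TEST = 1.0, 0.8

cmax_ref = peak_concentration(DOSE_MG, KA_REFERENCE, KE, VOLUME_L)
cmax_test = peak_concentration(DOSE_MG, KA_TEST, KE, VOLUME_L)
cmax_ratio = cmax_test / cmax_ref

print(f"Predicted peak time: reference {time_of_peak(KA_REFERENCE, KE):.2f} h, "
      f"test {time_of_peak(KA_TEST, KE):.2f} h")
print(f"Predicted Cmax ratio (test/reference): {cmax_ratio:.3f}")
```

In this toy setup, a slower release rate delays the peak and lowers it slightly. A real virtual bioequivalence model would also have to capture variability between people, which this sketch ignores entirely.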

Advanced Imaging Reveals What Blood Tests Can’t

Some drugs don’t just need to get into the blood; they need to be delivered in a very specific way. Think of an asthma inhaler. The size of the particles, how they stick together, even how they interact with the throat and lungs all matter. Traditional dissolution tests couldn’t capture this. Enter advanced imaging.

Technologies like scanning electron microscopy (SEM), optical coherence tomography, and atomic force microscopy-based infrared spectroscopy (AFM-IR) now let scientists see drug particles at the micrometer level. They can map how a tablet breaks down, how a patch adheres to skin, or how an inhaler’s aerosol disperses. The FDA’s March 2025 research report highlights the Dissolvit system, a next-gen dissolution apparatus that mimics conditions in the human body far better than older models. It’s already helping developers of complex inhaled products meet bioequivalence standards that were previously unattainable.

Automation Is Making Labs Faster and More Precise

Behind the scenes, labs are becoming smarter. Since late 2024, the FDA’s Office of Data, Analytics, and Research has rolled out automated sample-handling systems. Robots now prep, label, and process blood samples without the mix-ups that come with manual handling. Digital workflows connect every step, from sample collection to data analysis, without manual transfers. The result? A 37% increase in throughput and a 29% boost in precision. That’s not just faster; it’s more reliable. And it’s being adopted by major CROs worldwide.

Automation isn’t just about speed. It’s about consistency. When a lab in India, Germany, or the U.S. runs the same test, the results should be comparable. That’s why the ICH M10 guideline, adopted by the FDA and WHO in mid-2024, became so important. It unified bioanalytical method validation across regions. Before, a method approved in the U.S. might be rejected in Europe due to minor differences in validation criteria. Now, such cross-regional discrepancies have dropped by 62%. That means fewer delays, fewer retests, and faster global access to generics.

Costs Are Rising, but So Are Benefits

Here’s the catch: these new technologies aren’t cheap. A traditional bioequivalence study for a simple tablet still costs $1-2 million. But a tech-enhanced study using AI, imaging, and virtual models? That can run $2.5-4 million. So why bother?

Because for complex products, there’s no alternative. Without these tools, many life-saving generics, like long-acting insulin, transdermal pain patches, or inhaled corticosteroids, would never reach the market. The cost of delay is higher than the cost of innovation. And the ROI is clear: AI-driven approaches reduce study timelines by 40-50% and improve data accuracy by 28%. The global bioequivalence testing market is projected to hit $18.66 billion by 2035, driven largely by biosimilars and AI adoption. As of October 2025, the FDA had approved 76 biosimilars, many of which rely on these advanced methods.

Where the Tech Still Falls Short

Don’t get it wrong: this isn’t a magic bullet. Some products remain stubbornly difficult. Transdermal systems, for example, need better ways to measure skin irritation and adhesion over time. Orally inhaled products still lack standardized methods for charcoal block PK studies. Topical creams and gels require new ways to assess compositional differences beyond just active ingredient concentration.

And then there’s the safety question. Dr. Michael Cohen of ISMP warned in September 2025 that over-relying on in vitro models for narrow therapeutic index drugs, like warfarin or lithium, could be dangerous. If a model doesn’t perfectly mimic human absorption, even a small error could lead to underdosing or toxicity. The FDA is aware. That’s why it still requires clinical data for these high-risk drugs, even as it pushes forward with virtual models elsewhere.

The U.S.-Only Testing Mandate

In October 2025, the FDA launched a pilot program that requires bioequivalence testing for certain generic drugs to be conducted in the U.S. using only domestically sourced active pharmaceutical ingredients (APIs). This isn’t just about quality control; it’s a strategic move to rebuild domestic manufacturing capacity. It adds complexity for companies that rely on overseas labs, but it also creates new opportunities for U.S.-based CROs and testing facilities. By 2027, the FDA aims to review 90% of generic applications within 10 months. Meeting that goal means scaling up these new technologies fast.

What’s Next? The Road to 2030

The FDA’s research agenda through 2027 includes building validated in vitro models for next-gen drug forms: injectables with nanoparticles, ophthalmic gels, peptide-based therapies, and oligonucleotides. By 2030, experts predict AI-driven bioequivalence testing will handle 75% of standard generic applications. Complex products will rely on hybrid models that combine virtual simulations with targeted human studies only when absolutely necessary.

Regional growth is also accelerating. Saudi Arabia’s Vision 2030 and UAE partnerships with global CROs are building advanced bioequivalence labs in the Middle East. Africa is seeing investment through WHO-backed vaccine initiatives. This isn’t just a U.S. trend; it’s becoming a global standard.

For patients, this means more generic options, faster. For manufacturers, it means clearer paths to market. For regulators, it means smarter, data-driven decisions. The old way of testing bioequivalence isn’t gone, but it’s being replaced, one algorithm, one image, one automated sample at a time.

What is bioequivalence testing and why does it matter?

Bioequivalence testing compares how quickly and completely a generic drug enters the bloodstream compared to the brand-name version. If the two are bioequivalent, they’re considered therapeutically interchangeable. This ensures patients get the same clinical benefit from a cheaper generic, without risking safety or effectiveness.

How is AI changing bioequivalence studies?

AI tools like BEAM automate data analysis, reduce review times by over 50 hours per application, and predict bioequivalence outcomes with high accuracy. Machine learning models can now simulate how drugs behave in the body using in vitro data alone, cutting the need for human clinical trials for many complex products.

Are virtual bioequivalence studies reliable?

For many complex products, like long-acting implants or inhaled drugs, virtual bioequivalence is not just reliable; it’s the only practical option. FDA-funded models have shown they can replace clinical studies in up to 65% of cases. But for narrow therapeutic index drugs, clinical data is still required as a safety backup.

Why is the FDA requiring U.S.-based testing now?

The October 2025 pilot program requires bioequivalence testing for certain generics to be done in the U.S. using domestically sourced active ingredients. This supports U.S. manufacturing resilience and ensures tighter control over data quality and supply chain integrity.

What’s the biggest challenge with new bioequivalence technologies?

The biggest challenge is ensuring these advanced models accurately reflect human physiology, especially for drugs where small differences in absorption can lead to serious side effects. Regulators are proceeding cautiously, requiring clinical validation for high-risk products even as they embrace innovation for others.

For the first time in history, the science of proving a generic drug works isn’t stuck in the 1980s. It’s moving forward, with data, with machines, and with a clear focus on patient safety. The future of affordable medicine isn’t just about cheaper pills. It’s about smarter science.

So let me get this straight: AI is now deciding if my generic pills work? 🤔 Next they’ll be using algorithms to pick my therapist. I’ve seen this movie. Remember when they said self-driving cars were safe? Turns out they couldn’t tell the difference between a pedestrian and a paper bag. Now they’re letting machines review blood data? What’s next, AI prescribing insulin based on a spreadsheet? I’m not taking a pill approved by a bot that learned from 12 guys in a basement in Ohio.

The notion that AI-driven bioequivalence assessments are somehow equivalent to clinical data is not merely misleading; it is scientifically indefensible. Pharmacokinetic variability in human populations cannot be modeled with sufficient fidelity using in vitro surrogates, particularly for drugs with narrow therapeutic indices. The FDA’s reliance on BEAM constitutes an unacceptable erosion of evidentiary standards, and the 92% accuracy metric is meaningless without reporting confidence intervals, sample size, or external validation. This is not innovation. It is institutional negligence dressed in machine learning.

It is fascinating to observe how technological advancement, while accelerating access to essential medicines, simultaneously raises profound ethical and epistemological questions. The reduction of human biological complexity into algorithmic outputs, however sophisticated, risks commodifying health itself. We must ask: Are we optimizing for speed, or for wisdom? The patient’s body is not a dataset to be compressed, but a living system shaped by centuries of evolution. Perhaps the true bioequivalence lies not in blood concentrations alone, but in the integrity of the process that validates them.

They’re replacing human reviewers with AI because they don’t want to pay them. This isn’t progress. It’s corporate cost-cutting with a fancy name. You think a machine can understand why someone metabolizes a drug differently because they’re diabetic, or pregnant, or have a rare gut bacteria strain? Nah. They just want to push more generics out the door before the next quarterly report. And now they’re forcing testing in the U.S.? That’s not about safety. That’s about protecting pharma lobbyists’ profits.

AI is cool and all but why do we keep pretending tech fixes everything? 🤷‍♀️ I just want my blood pressure med to not kill me. If the FDA’s letting robots sign off on my meds, I’m switching to homeopathy. At least then I know I’m being scammed by someone with a crystal.

beams? sounds like a secret gov program. they already track your meds through your phone. now they wanna control the data too? you think this is about safety? nah. its about who owns your body. they dont want you to know what’s really in the pills. the real active ingredient is surveillance.

So the FDA is using robots to review data but still requires human trials for warfarin? That’s not a safety protocol, that’s a loophole. If the AI is 92% accurate, why not just let it handle everything and let the doctors deal with the 8%? Oh right, because then someone might get sued. This whole system is designed to protect lawyers, not patients. And don’t get me started on the $4M price tag for these "advanced" tests. Someone’s making bank while the rest of us wait for our generics.

I work in a clinical lab that just got one of those automated sample handlers. Honestly? It’s a game changer. No more mislabeled tubes. No more 3 a.m. calls because someone mixed up the samples. The tech isn’t perfect, but it’s way more consistent than humans after a 12-hour shift. I’m not saying we should ditch clinical trials. I’m saying let’s use the tools we have to make the ones we still need better. This isn’t magic. It’s just better science.

Oh look, the Americans are building their own testing labs. How quaint. We in the UK have been doing this properly for decades with actual human oversight and proper regulation. You can’t outsource quality control and call it "resilience." You’re just importing your problems and calling it patriotism. Next you’ll be banning antibiotics because they’re not made in Texas.

This is the most exciting thing I’ve read all year. Imagine a world where life-saving meds like insulin patches or long-acting inhalers are available to everyone faster and cheaper. We’ve been stuck in the 90s for too long. AI isn’t replacing doctors; it’s giving them back time to actually care for patients instead of drowning in spreadsheets. Let’s not fear progress. Let’s accelerate it.

The balance between innovation and caution is delicate. While virtual models reduce the need for human trials, they must be validated against real-world outcomes over time. We should not rush to replace the gold standard, but rather layer new tools upon it. The goal is not to eliminate human testing, but to minimize unnecessary exposure while maximizing safety. This evolution, if guided by humility, could serve as a model for other fields of medicine.

U.S. testing only. U.S. API only. No exceptions. No outsourcing. No compromises. This is national security. The supply chain must be domestic. The data must be domestic. The responsibility must be domestic. Anything less is weakness. The world will follow. They always do.

they say ai is 92% accurate but they never say what data it was trained on. what if it was trained on data from companies that own the brand name drugs? what if it’s rigged to say generics are not equivalent? what if this is just a way to block competition and keep prices high? they’ve been lying to us for years. this is just the next lie.

Just want to say thank you to everyone working on this. I’ve been on a generic med for 10 years and it’s kept me alive. Knowing that the system is getting smarter and faster means my kids might get access to lifesaving drugs before they even need them. Keep pushing. We’re with you.

So now my asthma inhaler is being tested by a robot that doesn’t even know what a cough feels like. 😭